Loggers work with something called an Appender. Simply put, an appender is the component responsible for taking a log request, building the log message in a suitable format and sending it to the output device.
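To make the idea concrete, here is a minimal plain-Java sketch of the appender concept. The `SimpleAppender` interface, `SimpleConsoleAppender`, `InMemoryAppender` and `LogRequest` below are hypothetical stand-ins written for illustration only. They are not Logback's classes; Logback's real appenders implement `ch.qos.logback.core.Appender`.

```java
import java.util.ArrayList;
import java.util.List;

public class AppenderSketch {

    /** Hypothetical log request: level, logger name and raw message. */
    record LogRequest(String level, String logger, String message) {}

    /** Hypothetical appender contract: format the request, then send it to an output. */
    interface SimpleAppender {
        default String format(LogRequest r) {
            // Roughly mirrors a "%-5level %logger - %msg" pattern
            return String.format("%-5s %s - %s", r.level(), r.logger(), r.message());
        }

        void append(LogRequest r);
    }

    /** Sends formatted messages to standard output. */
    static class SimpleConsoleAppender implements SimpleAppender {
        public void append(LogRequest r) {
            System.out.println(format(r));
        }
    }

    /** Keeps formatted messages in memory, standing in for another output device. */
    static class InMemoryAppender implements SimpleAppender {
        final List<String> lines = new ArrayList<>();

        public void append(LogRequest r) {
            lines.add(format(r));
        }
    }

    public static void main(String[] args) {
        InMemoryAppender memory = new InMemoryAppender();
        List<SimpleAppender> appenders = List.of(new SimpleConsoleAppender(), memory);
        LogRequest req = new LogRequest("WARN", "TestDbAppender", "Running code...");
        // One log request is handed to every attached appender
        for (SimpleAppender a : appenders) {
            a.append(req);
        }
        System.out.println("Stored in memory: " + memory.lines.size());
    }
}
```

The point of the design is that the logging call does not care where the message ends up; swapping or adding an output is just a matter of attaching another appender.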


So when we need to log to the console we have a ConsoleAppender. For file-based logging we use a FileAppender, and for logging to a database we have the DBAppender. Logging to a database? What for? Well, you log to files, and if you want better persistence, you log to a database.

There are applications where the logs are of high priority and warrant being placed in a database. For instance, I once worked with a client who logged every web service request and response XML to a database table. He built a screen over that table which allowed the support team to look up a particular request and its corresponding XML when debugging issues.

I decided to try the database appender with the Logback API. The first step was to download the necessary jars:

- logback-classic-1.0.13.jar

- logback-core-1.0.13.jar

- slf4j-api-1.7.5.jar

Next comes the Logback configuration:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<configuration>

  <appender name="stdout" class="ch.qos.logback.core.ConsoleAppender">
    <!-- encoders are assigned the type ch.qos.logback.classic.encoder.PatternLayoutEncoder by default -->
    <encoder>
      <pattern>%d{HH:mm:ss.SSS} [%thread] %-5level %logger{5} - %msg%n</pattern>
    </encoder>
  </appender>

  <appender name="db" class="ch.qos.logback.classic.db.DBAppender">
    <connectionSource class="ch.qos.logback.core.db.DriverManagerConnectionSource">
      <driverClass>org.postgresql.Driver</driverClass>
      <url>jdbc:postgresql://localhost:5432/simple</url>
      <user>postgres</user>
      <password>root</password>
    </connectionSource>
  </appender>

  <!-- the root logger's level defaults to DEBUG; here it is lowered to TRACE so trace calls are logged too -->
  <root level="TRACE">
    <appender-ref ref="stdout"/>
    <appender-ref ref="db"/>
  </root>

</configuration>
```

As seen here, I have created two appenders: a console appender and a database appender.

The database appender needs the JDBC driver class, the JDBC URL and the database credentials. These are supplied through the connectionSource element, whose class attribute selects the type of connection wrapper we want to use. Logback provides a few options here, such as DriverManagerConnectionSource, DataSourceConnectionSource and JNDIConnectionSource; I went with the DriverManagerConnectionSource class.
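Conceptually, a driver-manager-based connection source hands back a fresh JDBC connection from `java.sql.DriverManager` whenever one is requested. The class below is a hypothetical, simplified sketch of that idea, not Logback's `DriverManagerConnectionSource`; the driver class, URL and credentials are the ones from the configuration above.

```java
import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.SQLException;

/** Hypothetical, simplified stand-in for a DriverManager-based connection source. */
public class SimpleDriverManagerSource {
    private final String driverClass;
    private final String url;
    private final String user;
    private final String password;

    public SimpleDriverManagerSource(String driverClass, String url, String user, String password) {
        this.driverClass = driverClass;
        this.url = url;
        this.user = user;
        this.password = password;
    }

    /** Opens a brand-new physical connection on every call (no pooling). */
    public Connection getConnection() throws SQLException {
        try {
            // Explicit loading mirrors <driverClass>; JDBC 4 drivers also self-register
            Class.forName(driverClass);
        } catch (ClassNotFoundException e) {
            throw new SQLException("JDBC driver not on classpath: " + driverClass, e);
        }
        return DriverManager.getConnection(url, user, password);
    }

    public static void main(String[] args) {
        SimpleDriverManagerSource source = new SimpleDriverManagerSource(
                "org.postgresql.Driver", "jdbc:postgresql://localhost:5432/simple", "postgres", "root");
        // try-with-resources closes the connection after use
        try (Connection c = source.getConnection()) {
            System.out.println("Connected to " + c.getMetaData().getDatabaseProductName());
        } catch (SQLException e) {
            System.out.println("Could not connect: " + e.getMessage());
        }
    }
}
```

Opening a new physical connection for each request is simple but relatively costly, which is why pooled alternatives exist.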

The next step was to write a test class to exercise the configuration:

publicclass TestDbAppender {

privatestaticfinal Logger logger = LoggerFactory.getLogger(TestDbAppender.class);

public TestDbAppender() {

logger.info("Class instance created at {}",

DateFormat.getInstance().format(newDate()));

}This would work - but if it did were would the logs go ??I looked into the documentation for Logback and according to the documentation:

publicvoid doTask() {

logger.trace("In doTask");

logger.trace("doTask complete");

}

publicstaticvoid main(String[] args) {

logger.warn("Running code...");

new TestDbAppender().doTask();

logger.debug("Code execution complete.");

}

}

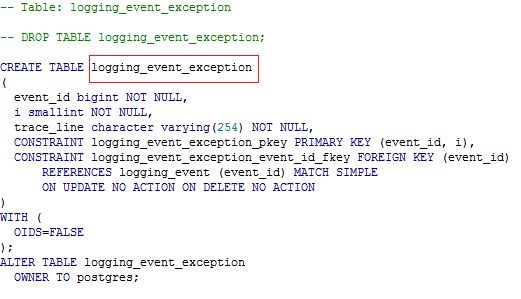

"The DBAppender inserts logging events into three database tables in a formatAccordingly I picked up the script files for the same.

independent of the Java programming language. These three tables are logging_event,

logging_event_property and logging_event_exception. They must exist before DBAppender can be used."

This resulted in three tables:

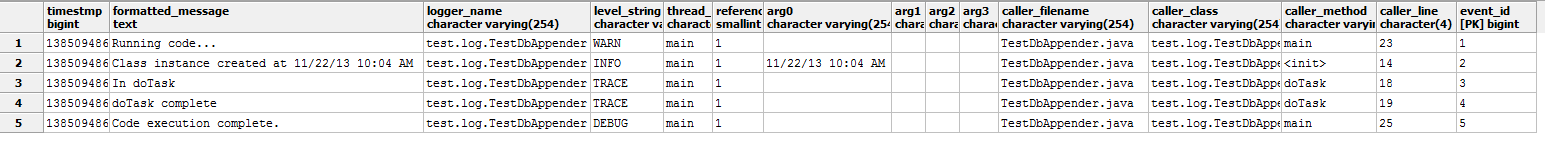

If we run the sample code now (with the PostgreSQL JDBC driver jar on the classpath), we can see that the records were created:

```
10:04:25.943 [main] WARN t.l.TestDbAppender - Running code...
10:04:26.001 [main] INFO t.l.TestDbAppender - Class instance created at 11/22/13 10:04 AM
10:04:26.036 [main] TRACE t.l.TestDbAppender - In doTask
10:04:26.071 [main] TRACE t.l.TestDbAppender - doTask complete
10:04:26.104 [main] DEBUG t.l.TestDbAppender - Code execution complete.
```

That was the console appender's output. The db appender, meanwhile, wrote the same events to the database tables.

As seen from the entries:

- Every log call is treated as a log event. The entry for the same is added in the logging_event table. This entry includes the timestamp, actual log message, the logger name etc among other fields.

- If the logger under consideration has any Mapped Diagnostic Context or MDC or context specific values associated than the same will be placed in the logging_event_property table. Too many values here will definitely slow down the log process.

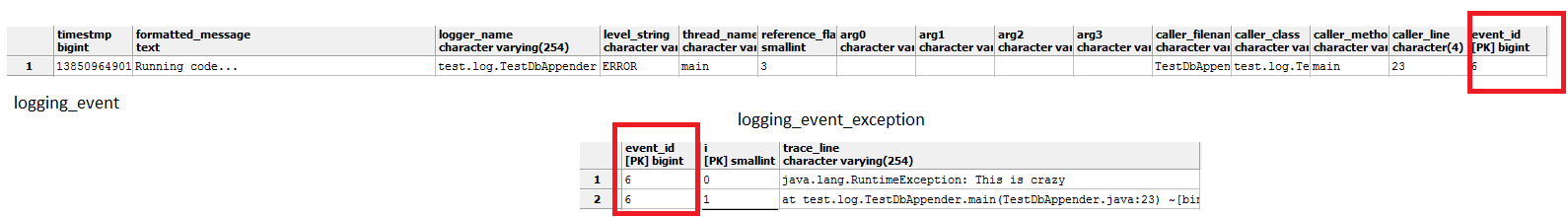

- Messages of type error often have stack traces associated with it. These are logged separately in the logging_event_exception table with each trace being treated as a separate record.

To check this, I changed the main method to log an exception:

```java
public static void main(String[] args) {
    logger.error("Running code...", new RuntimeException("This is crazy"));
}
```

The console appender printed:

```
10:31:30.198 [main] ERROR t.l.TestDbAppender - Running code...
java.lang.RuntimeException: This is crazy
	at test.log.TestDbAppender.main(TestDbAppender.java:23) ~[bin/:na]
```

The trace was also logged in the logging_event_exception table.

Is this a must-have feature for our projects? Logging has its costs, and database logging is more expensive still. With Logback, every log message results in an entry in at least one table. If the message carries an exception, that becomes multiple entries in a second table. If we have added values to our MDC map, there will be a correspondingly higher number of insert calls on the logging_event_property table.
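The insert counts described above can be approximated with a small plain-Java sketch. It is hypothetical, not Logback's code, and rests on these assumptions: one logging_event row per event; one logging_event_property row per key after merging logger-context properties with MDC values (MDC winning on a key clash, which appears to be how DBAppender merges them); and one logging_event_exception row per trace line. The trace-line count is simplified to a header line plus one line per stack frame for the throwable and each cause; Logback's actual output can differ, for example by trimming frames shared with the enclosing trace.

```java
import java.util.HashMap;
import java.util.Map;

/** Hypothetical estimate of the rows one logging event adds across the three DBAppender tables. */
public class RowEstimate {

    /** Context properties first, then MDC, so MDC values override on a key clash (assumed merge order). */
    static Map<String, String> mergeProperties(Map<String, String> context, Map<String, String> mdc) {
        Map<String, String> merged = new HashMap<>();
        if (context != null) merged.putAll(context);
        if (mdc != null) merged.putAll(mdc);
        return merged;
    }

    /** Simplified trace-line count: header line plus one line per frame, for each throwable in the cause chain. */
    static int traceLines(Throwable t) {
        int lines = 0;
        for (Throwable cur = t; cur != null; cur = cur.getCause()) {
            lines += 1 + cur.getStackTrace().length;
        }
        return lines;
    }

    /** Total inserts: 1 event row + property rows + exception rows. */
    static int rowsFor(Map<String, String> context, Map<String, String> mdc, Throwable t) {
        int propertyRows = mergeProperties(context, mdc).size();
        int exceptionRows = (t == null) ? 0 : traceLines(t);
        return 1 + propertyRows + exceptionRows;
    }

    public static void main(String[] args) {
        // A plain call with no MDC values and no exception: a single row
        System.out.println(rowsFor(Map.of(), Map.of(), null)); // prints 1

        // Two MDC keys plus an exception with a two-frame trace
        RuntimeException ex = new RuntimeException("This is crazy");
        ex.setStackTrace(new StackTraceElement[] {
            new StackTraceElement("test.log.TestDbAppender", "main", "TestDbAppender.java", 23),
            new StackTraceElement("test.log.Other", "run", "Other.java", 10)
        });
        System.out.println(rowsFor(Map.of(), Map.of("user", "alice", "requestId", "42"), ex)); // prints 6
    }
}
```

Even this rough model shows how a single error call with a few MDC values can turn into several inserts instead of one.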

From the Logback docs:

"Experiments show that writing a single event into the database takes approximately 10 milliseconds, on a "standard" PC. If pooled connections are used, this figure drops to around 1 millisecond."

As seen, the cost depends on your hardware and on whether connections are pooled. If your hardware can afford this hit and the application warrants this level of log maintenance, then this is a cool feature. For my client, the database appender eased his debugging headaches to a large extent. This feature is also available with Log4j.
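A quick back-of-the-envelope calculation shows what the quoted figures mean in practice. The sketch below multiplies the per-event costs from the Logback docs (about 10 ms unpooled, about 1 ms pooled) by a number of events. The 50,000 events per hour in `main` is a made-up workload, not a measurement, and the estimate assumes each event is written synchronously, one at a time.

```java
/** Back-of-the-envelope DB logging overhead using the per-event figures quoted from the Logback docs. */
public class DbLogCost {
    static final double MS_PER_EVENT_UNPOOLED = 10.0; // "approximately 10 milliseconds"
    static final double MS_PER_EVENT_POOLED = 1.0;    // "around 1 millisecond"

    /** Total time spent writing events, in seconds, assuming events are written one at a time. */
    static double secondsSpent(long events, double msPerEvent) {
        return events * msPerEvent / 1000.0;
    }

    public static void main(String[] args) {
        long eventsPerHour = 50_000; // hypothetical workload
        System.out.printf("Unpooled: %.0f s per hour%n", secondsSpent(eventsPerHour, MS_PER_EVENT_UNPOOLED));
        System.out.printf("Pooled:   %.0f s per hour%n", secondsSpent(eventsPerHour, MS_PER_EVENT_POOLED));
    }
}
```

Under that assumed load, the unpooled setup spends about 500 seconds per hour (over 8 minutes) writing log rows, against about 50 seconds with pooled connections.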